Building Agency in an Intelligent Future

Unpacking Power, Participation, and the Evolving Social Contract

We've entered a new phase of human evolution, one marked not by muscle or mind, but by shared cognition. The systems around us are no longer just executing our commands. They're learning from us, anticipating needs, nudging decisions, and, more subtly, redefining the extent of our agency.

From complex traffic flows to global healthcare prioritization, these AI-powered infrastructures will do more than process data; they'll shape how the world responds to us.

But as systems grow in complexity and intelligence, so must our understanding of what it means to be an agent in a hybrid age of abundant intelligence.

🏛️ From Economic Agency to System Stewardship

For most of modern history, being an "agent" meant participating in the economy. Economic agency referred to the ability to work, earn income, spend, and produce measurable value. It was what placed you inside society: how you made a living and how your contribution was quantified, usually in GDP or salary.

But that definition is showing signs of stress.

Today, much of the value generated online comes not from paid labour, but from behaviour. Every time you browse a website, react to a post, or generate data by moving through a city, you're creating value for platforms that harvest, sort, and monetize it. This raw material has been called behavioural surplus, a term coined by Shoshana Zuboff to describe the data trails that feed predictive systems.

The challenge is this: while we are contributing value, we are not necessarily controlling it. Our actions fuel the system, but they rarely influence its design. In other words, we're part of the machine, but not at the table. Economic agency, once tied to visible output, is becoming increasingly invisible, manifesting in data, attention, and influence, but often without the usual levers of control.

To regain agency, we need a new frame: system stewardship.

⚙️ Where Power Lives Now in Hybrid Systems

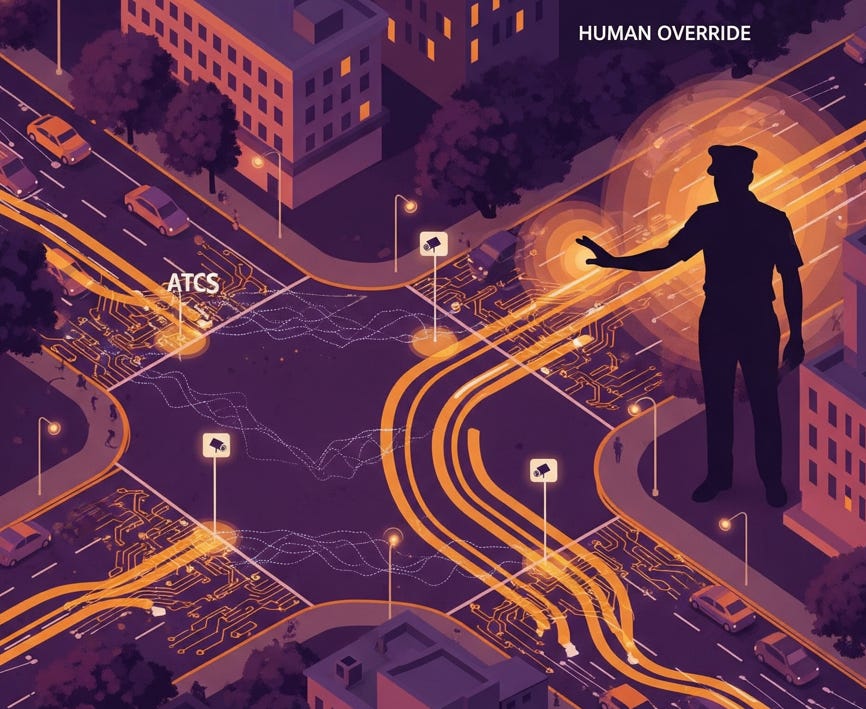

Socio-technical systems are environments where human judgment, machine logic, and environmental feedback all operate together. Consider Bengaluru's Vehicle Actuated Control (VAC) and Adaptive Traffic Control Systems (ATCS). These aren't just passive tools; they actively shape how traffic moves.

They surface options (like a green light duration), automate decisions (signal changes based on volume), and set the conditions for how people behave (which lane flows faster). In many cases, they determine what is visible, what is prioritized, and what is excluded.

Power in these systems is distributed, but not necessarily shared. It moves through layers of code, data, infrastructure, and corporate governance. And because these layers are often opaque, most participants don't know how their actions feed the machine, or how the machine acts in return.

In this context, participating in society means participating in systems. But participation is no longer direct. It's being mediated by algorithms, sensors, and feedback loops.

This shift calls for a new kind of fluency: not just the ability to use tools, but to understand how tools use us.

🤝 The Human-AI Social Contract: Negotiating Control

If we're going to live alongside intelligent systems, then we need more than interfaces. We need agreements. The Human-AI Social Contract is a way of thinking about how responsibility, decision-making, and oversight are distributed between humans and machines.

It asks simple but profound questions:

What decisions should always remain human?

What tasks can we safely delegate to machines?

Which domains require co-governance?

Consider Bengaluru's traffic systems. Ideally, the ATCS uses AI to handle the real-time flow of signals, drawing on cameras and congestion data to optimize traffic across vast corridors. Yet, anyone who has driven in Bengaluru knows that traffic police often override these automated signals, especially during peak hours or specific bottlenecks. This constant manual intervention highlights the still-evolving nature of our Human-AI Social Contract.

The "bugs" in Bengaluru's system aren't always technical glitches; they're often the result of the sheer complexity of reality. The system struggles with:

Heterogeneous Traffic: A mix of cars, bikes, autos, and pedestrians, combined with a lack of strict lane discipline, makes it incredibly challenging for algorithms designed for more orderly traffic to accurately detect and manage flow.

Unforeseen Events: A sudden vehicle breakdown, an impromptu protest, or an unexpected VIP movement can instantly create chaos that the automated system isn't programmed to handle. A human officer can assess and respond dynamically.

Sensor Limitations and Data Quality: Glare, dust, heavy rain, or simply the immense density of vehicles can compromise sensor accuracy, leading to flawed data input for the AI.

Algorithm Learning: These systems are still learning the unique, chaotic patterns of Bengaluru's roads. They're constantly being fine-tuned, and during this learning phase, they'll make suboptimal decisions.

In these moments, the traffic police become crucial system stewards. They step in to exercise human judgment, demonstrating that while tasks can be delegated to machines, the ultimate responsibility for the public good (clearing a stubborn bottleneck, managing a sudden surge, ensuring safety) still rests with humans.
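For readers who think in code, here is a minimal sketch of that division of labour. It is not how Bengaluru's ATCS is actually implemented; the bounds, the seconds-per-vehicle figure, and every name below are hypothetical, chosen only to illustrate the idea. The algorithm proposes a green-light duration from the detected queue, a human officer can override it, and the system records who decided and why.

```python
from dataclasses import dataclass
from typing import Optional

# All constants are illustrative assumptions, not real ATCS parameters.
MIN_GREEN_S = 10            # assumed lower bound on green time, in seconds
MAX_GREEN_S = 90            # assumed upper bound on green time, in seconds
SECONDS_PER_VEHICLE = 2.0   # assumed time for one queued vehicle to clear


@dataclass
class SignalDecision:
    green_seconds: float
    decided_by: str  # "algorithm" or "officer"
    reason: str


def automated_green(queue_length: int) -> float:
    """Scale green time with the detected queue, clamped to the bounds."""
    proposed = queue_length * SECONDS_PER_VEHICLE
    return max(MIN_GREEN_S, min(MAX_GREEN_S, proposed))


def decide_green(queue_length: int,
                 officer_override_s: Optional[float] = None,
                 override_reason: str = "") -> SignalDecision:
    """Delegate timing to the algorithm unless a human steps in."""
    if officer_override_s is not None:
        # The human decision wins, and the override is logged, not hidden.
        return SignalDecision(officer_override_s, "officer",
                              override_reason or "manual override")
    return SignalDecision(automated_green(queue_length), "algorithm",
                          f"queue of {queue_length} vehicles")


# Routine case: the machine handles it.
routine = decide_green(queue_length=20)

# Unforeseen event: an officer extends the green beyond the automated bound.
urgent = decide_green(queue_length=20, officer_override_s=120,
                      override_reason="ambulance approaching")
```

The detail that matters is the `decided_by` field. Keeping a record of when a human stepped in, and for what reason, is one simple way to keep delegation from quietly turning into lost accountability.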

The contract isn't a one-time agreement; it's a living negotiation. As both human and machine intelligences evolve, we must revisit where authority lies. That means designing systems that are transparent, contestable, and open to revision.

Without such a social contract, we risk handing over more than tasks; we risk offloading accountability.

💡 Rethinking Value in Systems of Shared Cognition

As systems become smarter, we must rethink what we mean by value. Traditional economics values what can be priced: labour, goods, capital. But hybrid systems generate a different kind of value. They produce coordination, resilience, trust, and knowledge: qualities that don't show up in quarterly reports, yet form the backbone of society.

For example, a robust traffic system that reduces overall commute times across Bengaluru may not maximize short-term individual profit, but it offers long-term societal resilience and efficiency. A collaborative urban planning app may not produce goods, but it enhances civic trust and social cohesion.

To design for such value, we need ethical frameworks that align incentives with public good. That means holding system designers accountable, not just for functionality, but for impact. It also means including the public in shaping the objectives of the systems that govern them.

In short, we must shift from asking "what can this system do?" to "who does it serve, and how?"

🧠 Becoming System Fluent: The New Literacies of Agency

If agency is shifting from economic output to system stewardship, then we need new skills to match. Among them:

Systems thinking: The ability to see interconnections between technology, policy, behaviour, and design, recognizing why Bengaluru's traffic might be chaotic despite smart signals.

Tech literacy: Understanding how decisions are made within technical systems and how to question them—for example, knowing why a certain signal timing might be suboptimal.

Ethical reasoning: Recognizing the trade-offs embedded in optimization goals—do we prioritize emergency response, public transit, or private vehicles in traffic flow?

Participatory design: Contributing to the creation of systems, not just adapting to them—advocating for better signal algorithms or clearer road markings.

These literacies are no longer optional; they will soon be survival skills in the socio-technical future. Imagine a future voter who cannot understand how algorithmic policy models work, or a worker who cannot see how their behaviour feeds performance surveillance tools. Without these literacies, agency becomes performative. With them, it becomes transformative.

🎯 Shaping the Systems That Shape Us

We're not at the edge of a technological revolution. We're in the middle of a systemic evolution. Human and machine intelligence are no longer separate categories. They are co-creating the architecture of everyday life. From traffic management to healthcare, from education to governance, our futures are being built not just by tools, but by systems that sense, think, and act.

In this world, agency isn't about having power over machines. It's about having influence within the systems that define how power flows.

Join the Observer Family in shaping this future. Dive into the comments, ask challenging questions, share your own experiences, and connect with fellow members in our chat. Your active participation is the real force that transforms observation into impact.

Let's build this future wisely, together.